Problem Statement

Autonomous multi-robot systems can automate processes such as warehousing, agricultural harvesting, and even domestic chores.

Because a multi-robot system may contain many types of robots, it usually involves many different teleoperation methods (e.g., buttons, joysticks, apps).

How can we reduce the number of remote controllers needed to teleoperate multi-robot systems, while also improving the ease of interacting with such systems?

Project Overview

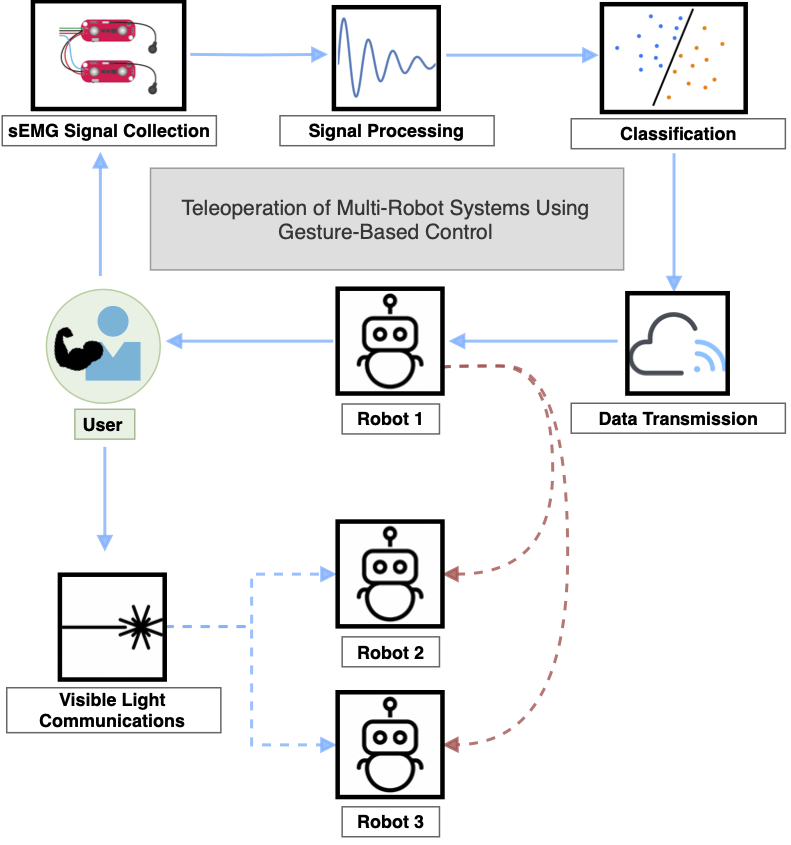

The project has two main components: (1) robot selection and (2) robot control. Robot selection, shown in the bottom half of the figure, uses visible light communication. Robot control, shown in the upper half, uses myoelectric control.

The user selects a robot to control through a visible light communication channel. After selecting a robot, the user performs hand gestures, which are recorded as surface electromyography (sEMG) signals and translated into commands for the selected robot.
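To make the select-then-command flow concrete, here is a minimal Python sketch. It is not the design from the thesis: the on-off keyed robot ID, the per-channel RMS features, the nearest-centroid classifier, and the gesture names and commands are all hypothetical stand-ins chosen for illustration.

```python
import math
from typing import Dict, List, Sequence, Tuple

# Hypothetical assumption: each robot broadcasts its ID as on-off keyed light
# pulses, sampled once per bit (MSB first). Brightness above threshold reads as 1.
def decode_robot_id(light_samples: Sequence[float], threshold: float = 0.5) -> int:
    robot_id = 0
    for sample in light_samples:
        robot_id = (robot_id << 1) | (1 if sample > threshold else 0)
    return robot_id

# Root-mean-square amplitude of each sEMG channel; window is [samples][channels].
def rms_features(window: Sequence[Sequence[float]]) -> List[float]:
    n_channels = len(window[0])
    return [
        math.sqrt(sum(sample[c] ** 2 for sample in window) / len(window))
        for c in range(n_channels)
    ]

# Nearest-centroid classifier: pick the gesture whose stored feature vector
# is closest (Euclidean distance) to the observed features.
def classify_gesture(features: Sequence[float],
                     centroids: Dict[str, Sequence[float]]) -> str:
    return min(centroids, key=lambda g: math.dist(features, centroids[g]))

# Hypothetical gesture-to-command table shared by all robots.
GESTURE_COMMANDS = {"fist": "stop", "open_hand": "forward", "wave_in": "turn_left"}

def command_for(light_samples: Sequence[float],
                emg_window: Sequence[Sequence[float]],
                centroids: Dict[str, Sequence[float]]) -> Tuple[int, str]:
    """Select a robot from the light signal, then map the gesture to a command."""
    robot_id = decode_robot_id(light_samples)
    gesture = classify_gesture(rms_features(emg_window), centroids)
    return robot_id, GESTURE_COMMANDS[gesture]

if __name__ == "__main__":
    centroids = {"fist": [0.8, 0.8], "open_hand": [0.1, 0.6], "wave_in": [0.6, 0.1]}
    light = [0.9, 0.1, 0.9]                # bits 1, 0, 1 -> robot 5
    emg = [[0.7, 0.9], [-0.9, -0.7]]       # two samples, two channels
    print(command_for(light, emg, centroids))  # (5, 'stop')
```

Separating selection from control this way means one gesture vocabulary can drive any robot; only the light-decoded ID changes which robot receives the command.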

Thesis defense

I defended my thesis on April 2, 2020, over Zoom in front of the Harvard SEAS community. Here’s the recording of the presentation. In it, I discuss the implications of gesture-controlled robotics and how such control systems can be built for multi-robot environments, and I present the design I have been working on.

If you are interested in this project, feel free to check out the poster here!

If you want all the details, read the full report here!